Finding the length of a string in JavaScript is simple: you use the .length property and that’s it, right?

Not so fast. The “length” of a string may not be exactly what you expect. It turns out that the .length property returns the number of UTF-16 code units in the string, not the number of characters (or, more specifically, graphemes) that we might expect. For example, "😃" has a length of 2, and "👱‍♂️" has a length of 5!
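To make this concrete, here is a small sketch you can paste into a console. The variable names are ours, and the emojis are written as escape sequences so that the exact code points are unambiguous even if your editor renders them differently.

```javascript
// 😃 is the single code point U+1F603.
const smiley = "\u{1F603}";
// 👱‍♂️ is U+1F471, a zero width joiner (U+200D), the male sign (U+2642)
// and a variation selector (U+FE0F).
const blondMan = "\u{1F471}\u200D\u2642\uFE0F";

console.log("hello".length); // 5
console.log(smiley.length); // 2
console.log(blondMan.length); // 5
```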

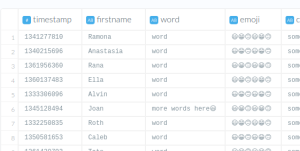

Screenshot from Etleap’s data wrangler where the column width depends on the column contents.

In our application we have a data wrangler that lets you view a sample of your data in a tabular format. Since this table supports infinite scrolling, both rows and columns are rendered on demand as you scroll vertically or horizontally. We can’t render all the rows and columns at once since a table could easily include more than a hundred thousand cells, which would bring the browser to its knees.

Imagine that most rows of a column contain a small amount of data, such as a single word, but a single row contains more data, such as a sentence. If this row is outside of the currently viewed area, we don’t want the column to expand as you scroll down, and we definitely don’t want to cram the sentence into the same small space that’s required by the word. This means that we need to find the widest cell in the column before rendering all the cells. It’s fast and straightforward to find the length of the content in each cell, but what if the cell contains emojis or other content where we can’t rely on the length property to give us an accurate value?

Code units vs. code points

Let’s do a quick Unicode recap. Each character in Unicode is identified by a unique code point, a number between 0 and 10FFFF in hexadecimal (written U+0000 to U+10FFFF). Storing every code point at a fixed width would in practice take 4 bytes per character, which is wasteful for text that mostly uses small code points. To avoid that, Unicode also defines several encodings, including UTF-16, which is the encoding JavaScript exposes for its strings.

UTF-16 is a variable-length encoding, which means that it uses either 2 or 4 bytes for each code point, depending on what is required. Put differently, UTF-16 uses one or two 16-bit code units to represent one Unicode code point. The most commonly used characters all fit into one code unit, but some of the more exotic characters, such as emojis, require two code units.
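If you are curious how one code point turns into two code units, the arithmetic can be sketched as follows. The function name toUtf16CodeUnits is ours, and the sketch assumes a valid code point outside the reserved surrogate range (D800 to DFFF).

```javascript
// Encode a single Unicode code point as UTF-16 code units.
function toUtf16CodeUnits(codePoint) {
  if (codePoint <= 0xffff) {
    return [codePoint]; // fits in one 16-bit code unit
  }
  const offset = codePoint - 0x10000; // leaves a 20-bit value
  const high = 0xd800 + (offset >> 10); // top 10 bits: high surrogate
  const low = 0xdc00 + (offset & 0x3ff); // bottom 10 bits: low surrogate
  return [high, low];
}

const toHex = (units) => units.map((unit) => unit.toString(16));

console.log(toHex(toUtf16CodeUnits(0x65))); // [ '65' ] for "e"
console.log(toHex(toUtf16CodeUnits(0x1f603))); // [ 'd83d', 'de03' ] for 😃
```

The two values in the second result are exactly what "\u{1F603}".charCodeAt(0) and "\u{1F603}".charCodeAt(1) return.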

This is where a problem arises. Since the .length property returns the number of code units, and not the number of code points, it does not directly map to what you may expect. As an example, the emoji "😃" has a length of 2, even though it is a single code point and looks like only one character.

How can we work around this? ES2015 introduced a way of splitting a string into its respective code points by providing a string iterator. Both Array.from and the spread operator [...string] use this iterator internally, so either can be used to get the length of a string in code points.
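As a minimal sketch, counting code points could look like this. The helper name countCodePoints is ours, not a built-in.

```javascript
// Count code points by relying on the string iterator,
// which yields one full code point per step.
function countCodePoints(str) {
  return [...str].length;
}

const grin = "\u{1F603}"; // 😃

console.log(grin.length); // 2 (code units)
console.log(countCodePoints(grin)); // 1 (code points)
console.log(Array.from(grin).length); // 1, same iterator under the hood
```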

Combining Characters

It turns out that code points are not the only caveat regarding string lengths in JavaScript. Another is combining characters. A combining character is a character that doesn’t stand on its own, but rather modifies the other characters around it. This is supported in Unicode, meaning that a character such as “è” can be made up of two code points, “e” and “\u0300”. The same idea applies to some emojis, such as "👱‍♂️", which is a combination of "👱" and "♂" with a zero width joiner (\u200D) in between.
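The snippet below is a small illustration of why counting code points is still not enough. It also shows String.prototype.normalize, which helps only when a precomposed form exists, so treat it as a partial workaround rather than a fix. The variable names are ours.

```javascript
const composed = "\u00E8"; // è as one precomposed code point
const decomposed = "e\u0300"; // e followed by a combining grave accent

console.log(composed === decomposed); // false, although both render as è
console.log([...composed].length); // 1
console.log([...decomposed].length); // 2

// NFC normalization merges the pair into the precomposed code point.
console.log(decomposed.normalize("NFC") === composed); // true

// There is no precomposed form for emoji joined with a zero width joiner,
// so this one still counts as several code points.
const joined = "\u{1F471}\u200D\u2642\uFE0F"; // 👱‍♂️
console.log([...joined].length); // 4
```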

Working around this is more complicated. For a long time there was no built-in way of reliably counting graphemes in JavaScript. Intl.Segmenter, which started out as a proposal and is now part of the ECMAScript Internationalization API, can split a string into graphemes, which you can then count. If you need to support an environment that doesn’t ship it, there’s a polyfill.
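In environments where Intl.Segmenter is available, counting graphemes could be sketched like this. The helper name countGraphemes and the default locale are our assumptions, and the exact segmentation depends on the Unicode data shipped with the runtime.

```javascript
// Count user-perceived characters (grapheme clusters) in a string.
function countGraphemes(str, locale = "en") {
  const segmenter = new Intl.Segmenter(locale, { granularity: "grapheme" });
  // segment() returns an iterable with one entry per grapheme cluster.
  return [...segmenter.segment(str)].length;
}

console.log(countGraphemes("hello")); // 5
console.log(countGraphemes("e\u0300")); // 1 (e + combining grave accent)
console.log(countGraphemes("\u{1F471}\u200D\u2642\uFE0F")); // 1 (👱‍♂️)
```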

Environment Specific Differences

Did you know there’s a ninja cat emoji? Neither did we, because it’s a Windows-only emoji! It’s represented by a combination of "🐱" and "👤". This means that Windows users will see this combination as one character, while other users will see it as two characters. Depending on the user’s choice of fonts, they could even see something completely different. You could try to prevent this issue by choosing a specific font for your web app, but that won’t be sufficient, as the browser will still search through other fonts on the system if a character is not available in your chosen font.

Checkmate?

The various environment-specific differences mean that there’s generally no way of measuring the rendered width of a string mathematically. Therefore, the only way to determine the width in pixels is to render the string and measure it. For our use case in the wrangler, this is exactly what we wanted to avoid in the first place. However, there are some optimizations that we can make.

Instead of rendering all the strings in each column, we can split the strings into their corresponding graphemes and render them individually. This allows us to cache the pixel length of each grapheme we encounter. Since there are substantially fewer graphemes than unique strings in a table, this results in a significant reduction in total rendering. This way we can easily determine the correct width of a column, all while keeping the scrolling snappy and your browser happy.
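The caching idea can be sketched roughly as follows. This is not the wrangler’s actual code: createWidthCalculator and measureGrapheme are hypothetical names, Intl.Segmenter is assumed to be available for the splitting step, and summing per-grapheme widths is treated as a good enough approximation of the rendered width.

```javascript
// measureGrapheme is a hypothetical callback that renders one grapheme
// and returns its width in pixels.
function createWidthCalculator(measureGrapheme) {
  const cache = new Map(); // grapheme -> measured width
  const segmenter = new Intl.Segmenter(undefined, { granularity: "grapheme" });

  // Sum the cached widths of all graphemes in a string,
  // measuring only graphemes that have not been seen before.
  function widthOf(text) {
    let total = 0;
    for (const { segment } of segmenter.segment(text)) {
      let width = cache.get(segment);
      if (width === undefined) {
        width = measureGrapheme(segment);
        cache.set(segment, width);
      }
      total += width;
    }
    return total;
  }

  // The column needs to be as wide as its widest cell.
  function columnWidth(cells) {
    return cells.reduce((max, cell) => Math.max(max, widthOf(cell)), 0);
  }

  return { widthOf, columnWidth };
}

// Stand-in measurement for illustration only: 8px per grapheme,
// 16px for anything that takes more than one code unit.
let renderCount = 0;
const fakeMeasure = (grapheme) => {
  renderCount += 1;
  return grapheme.length > 1 ? 16 : 8;
};

const calculator = createWidthCalculator(fakeMeasure);
console.log(calculator.columnWidth(["cat", "act", "a cat"])); // 40
console.log(renderCount); // 4: "c", "a", "t" and " " were each measured once
```

In a browser, the callback could for example render the grapheme into a hidden element and read its width, or use a canvas context’s measureText, as long as it uses the same font as the table.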